Msty: local LLMs for everyone

If you've ever been intrigued by the idea of running an AI model right from your laptop, whether for privacy concerns, open-source exploration, or just plain curiosity, Msty might just be the perfect starting point for you.

Today, let's look at one of the simplest ways I've found to get started with running a local LLM on a laptop (Mac or Windows). I've been using it for the past several days, and I'm really impressed. Whether you're interested in getting started with open-source local models, concerned about your data and privacy, or looking for a simple way to experiment as a developer, Msty is a great place to start.

Last week, we explored Pinokio, highlighting how easy it makes setting up a local virtual environment to run a Large Language Model (LLM). Despite the technical jargon, the goal was clear: to simplify the process. It's been quite the journey through dozens of local LLM interfaces over the past year, and we've seen steady progress in both UI/UX and ease of getting started. Among these, Msty emerges as the ideal starting point for beginners. While Ollama is paving the way for local LLM deployments, Msty offers a no-fuss approach that skips the complexities of `npm install` in favor of a user-friendly experience:

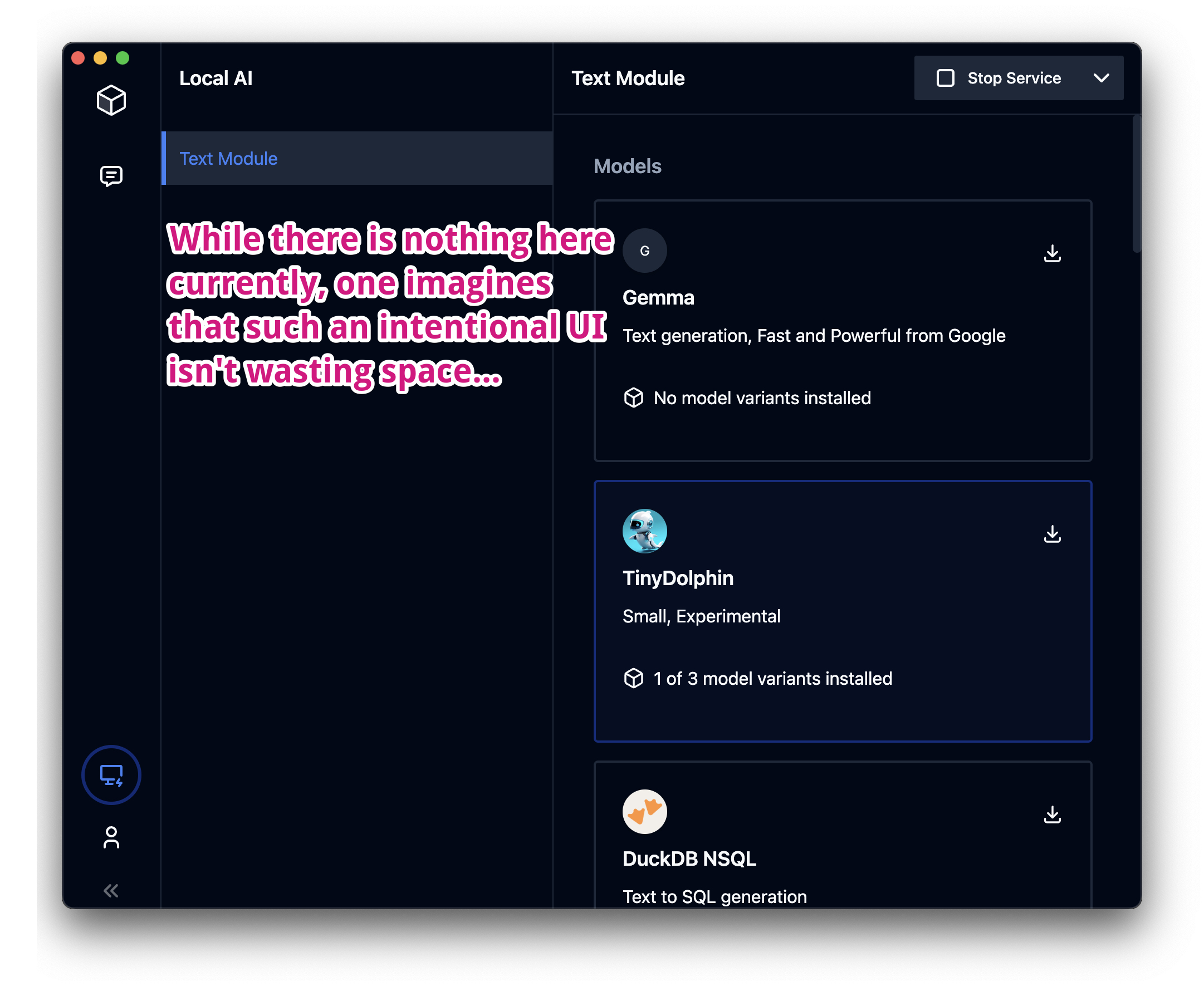

Go to the Msty website and click "Download".

Install the app the way you normally would.

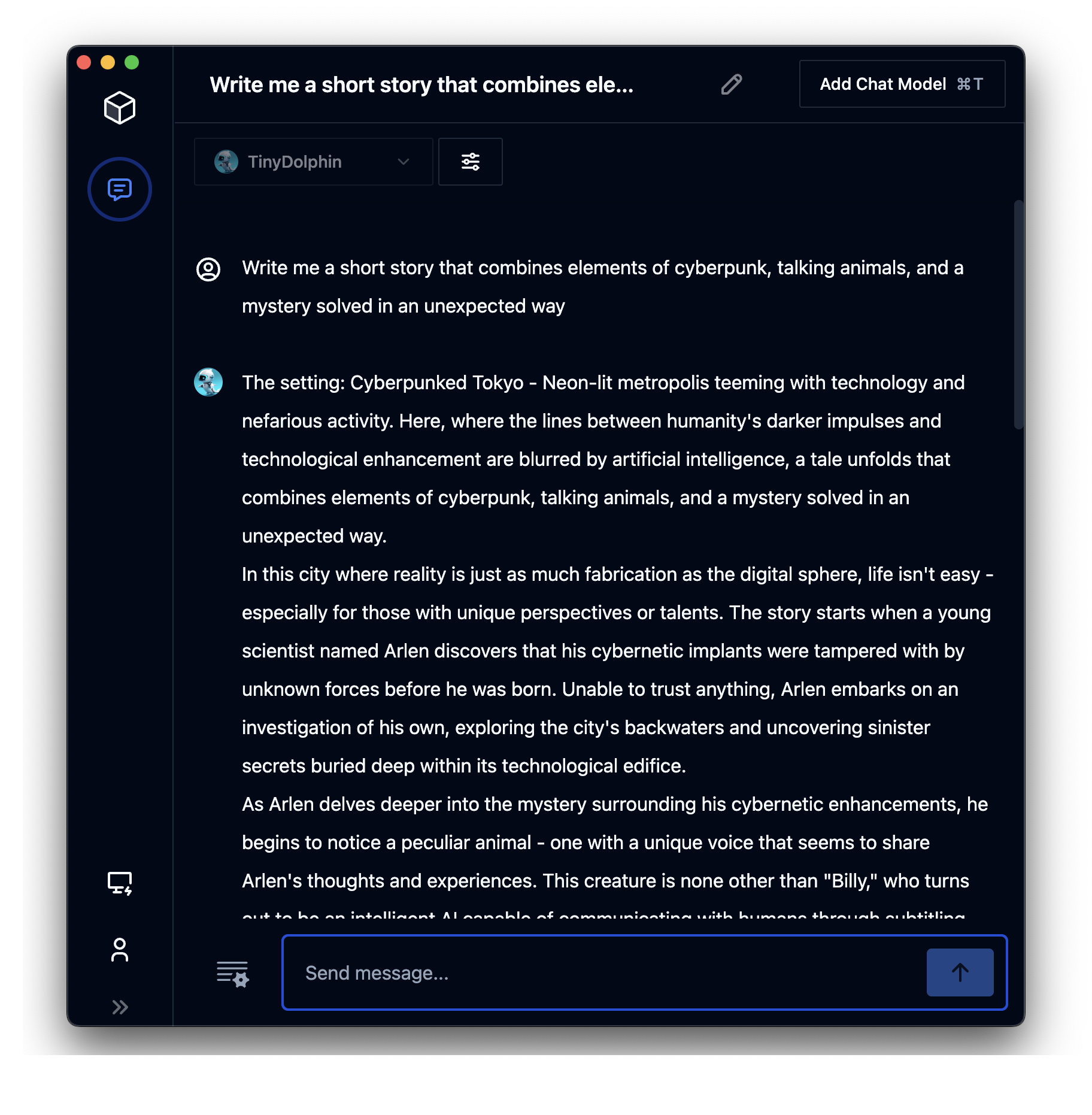

You can now use a local LLM. I recommend starting with a "small" model like TinyDolphin. This model won't be as capable as larger ones, but keep in mind that running an LLM locally requires significant computing power, so a smaller model will respond faster. Larger models respond more slowly, and a model that is too large for your machine may freeze your system.
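To see why model size matters so much, here is a rough back-of-the-envelope sketch in Python (it is not part of Msty). It assumes memory use is dominated by the model's weights and ignores the context window, runtime overhead, and the operating system. The model sizes and the 4-bit quantization level are illustrative assumptions, not Msty's actual settings.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed just to hold a model's weights.

    Assumes memory is dominated by the weights; real usage is higher once the
    context window, runtime overhead, and the operating system are included.
    """
    # billions of parameters * bytes per parameter (bits / 8) = gigabytes
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    # Illustrative sizes only; 4 bits per weight is an assumed quantization level.
    for label, params in [("1B model", 1.0), ("7B model", 7.0), ("70B model", 70.0)]:
        gb = estimate_weight_memory_gb(params, bits_per_weight=4)
        print(f"{label}: roughly {gb:.1f} GB for the weights alone at 4-bit")
```

Under these assumptions, a 70B-parameter model needs about ten times the memory of a 7B one just for its weights, which is why it can overwhelm a typical laptop while a small model runs comfortably.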

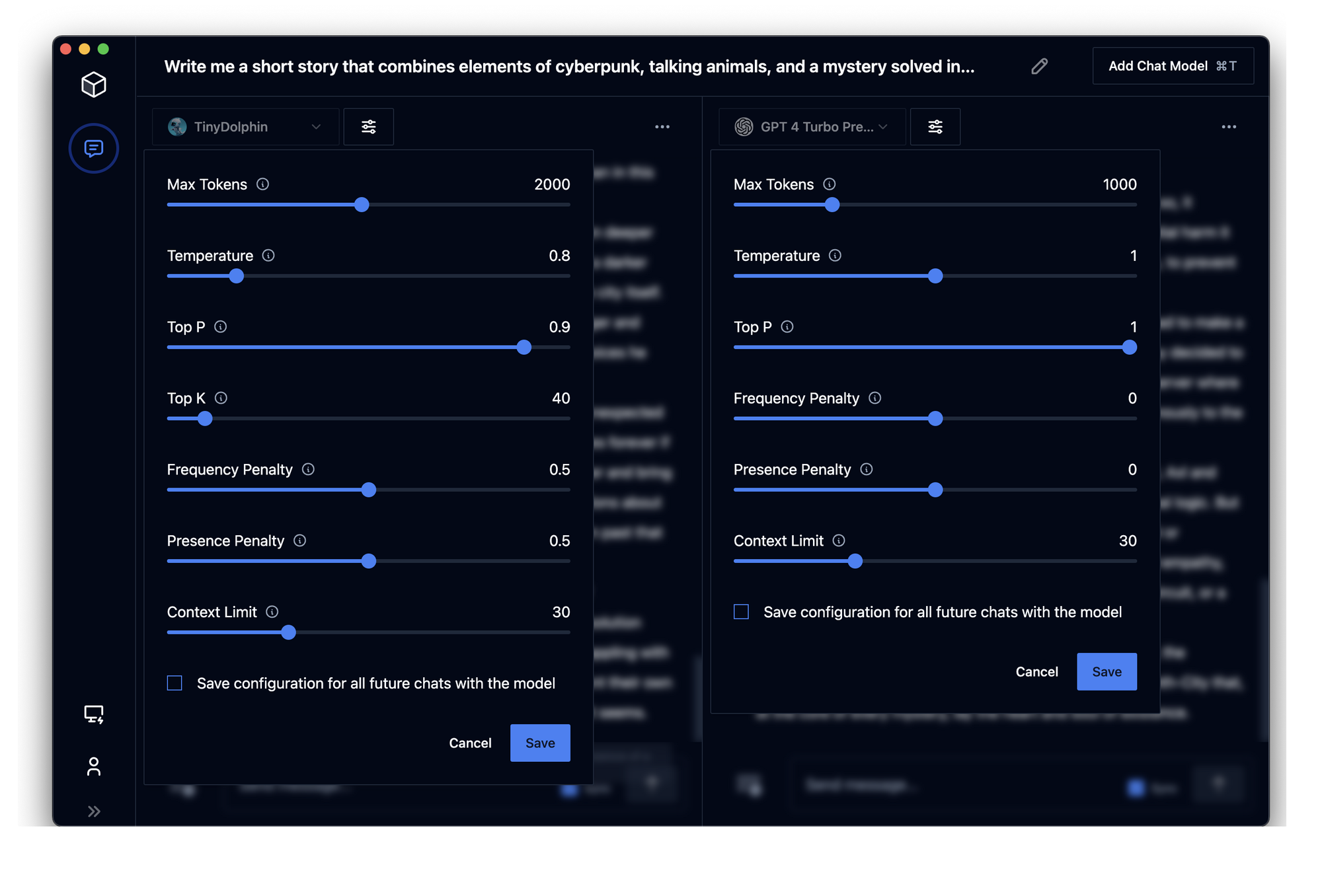

That was easy, right? Msty distinguishes itself by offering an intuitive way to work with both local and online LLMs. It supports a range of popular AI models, including Mixtral, Llama 2, Qwen, GPT-3, and GPT-4. Switching between models is straightforward and shows how focused the developers have been on making things as simple as possible.

For advanced users, the app offers a massive prompt library, sticky prompts for recurring tasks, parallel chats with synchronized input for comparing models, and organizational tools like chat folders. Privacy and offline functionality are central: your data stays confidential, and the app remains usable without an internet connection. Msty also makes it easy to refine AI-generated text with simple options for improving a response.

Msty's offline capability, strong privacy focus, and support for both macOS and Windows (with Linux support in the pipeline) make it appealing to a broad audience. The app handles local model downloads, supports GPU acceleration on macOS, and works with online models through API keys.

I reached out to Msty's developer team on Discord and asked about the possibility of open-sourcing the app, as well as support for mobile platforms. The team responded:

> We were recently talking about open sourcing it at some point so yes, at some point we would like to open source it. But we have many new features planned (such as STT, RAG etc) and don't want to get distracted with maintaining an open source project right at this point.
>
> We want to support iOS and Android version down the road. This will be done by shipping a headless version of Msty that you could install on a PC and then adding the IP address/url to the mobile app. We are still few months away for this.

I appreciate the candor and focused direction the team is taking here, and I'll be following along closely! I do hope an open-source version of the software becomes available, since it would align with their core commitment to accessibility, but I understand the rationale and, again, applaud the focus. They also seem to be laying the foundation for a lot more interesting features to come.

Msty opens up the world of AI, offering a seamless, privacy-focused, and user-friendly platform for everyone from curious beginners to seasoned developers. It stands out as a gateway to the vast possibilities of LLMs, without the usual technical barriers.