4 Free & Local Tools for AI chatbots (no command line)

If you've been following along, you know that making the latest technology available to everyone is one of my primary drivers. Giving everyone equal opportunity helps all of us. Accordingly, improving the accessibility of the latest LLMs and associated research projects is one of my big focuses this year.

Today I want to look at 4 easy, free, and private ways to work with LLMs. We'll look at the latest and greatest in open source, such as Mistral, Llama 3, and Command R+. We'll also look at proprietary models such as GPT-4, Claude 3, and Gemini; to use those, however, you will need your own API keys. If that's a step too far, no problem, just stick with the local models mentioned throughout.

Here are the criteria I used to measure the quality of each solution:

- Speed to Chat: Steps from download to having a first conversation

- UI/UX: How easy it is to navigate the app and to create and manage chats

- Privacy: Privacy policies as well as app licenses

- Features: RAG, Tools, Embedding Models, and more

- Specific Use-Cases

Now that we have our criteria, let's explore the apps available for anyone to use today!

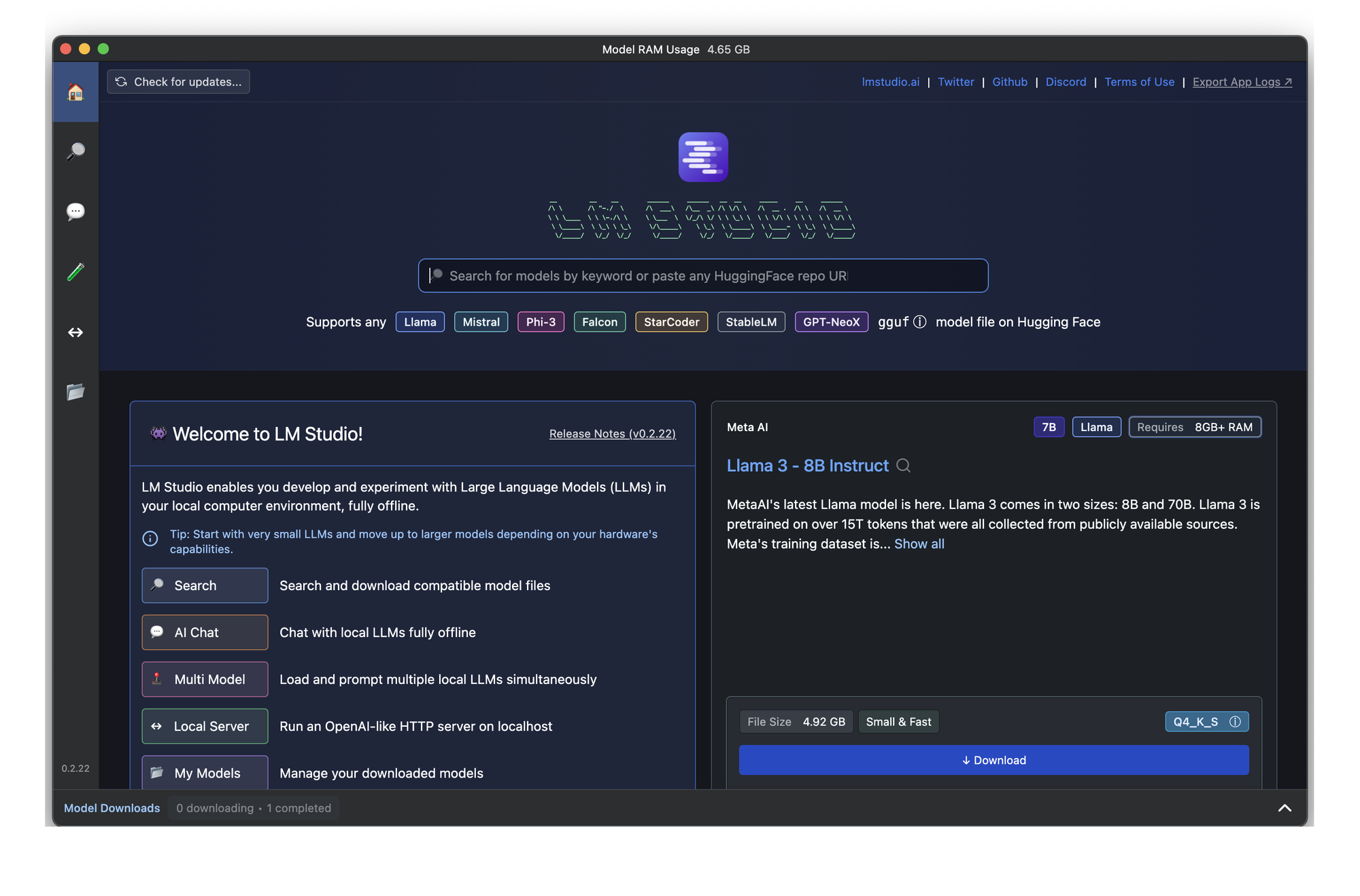

LM Studio

1-click install. No models included, so you'll have to download the first one. Average UI/UX (but improving). Closed-Source. Privacy statement on homepage. The advanced coding features and comparison playground are a huge plus for LM Studio. Works with any model on Hugging Face in GGUF format. These are downloaded and stored locally. No integration with proprietary or remote models.

Excellent for building and testing your own ideas for an application. As the name implies, you can really fine-tune your LLM's settings here and then get the basic code blocks for direct implementation. My main gripe is the total lack of integration with other local model directories. Every other app on this list either integrates with Ollama or lets you select a model directory. Only LM Studio dictates a specific folder structure, which makes model management across multiple apps a bit of a mess. No RAG available by default, though that's a minor gripe.
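To give a feel for what those "basic code blocks" look like, here is a minimal sketch of calling a locally served model from Python. It rests on several assumptions that are not from the testing above: that the app's local server is switched on, that it speaks the OpenAI-style chat completions format, and that it listens at `http://localhost:1234/v1`. The model name `local-model` is a placeholder. Check the app's own server tab for the real values.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible local server is running at this address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build (but do not send) a chat completions request."""
    payload = {
        "model": model,  # placeholder name; use whatever your app reports
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(send_chat("Say hello in five words."))` should print the model's reply. Nothing leaves your machine, which is the whole point of the local setup.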

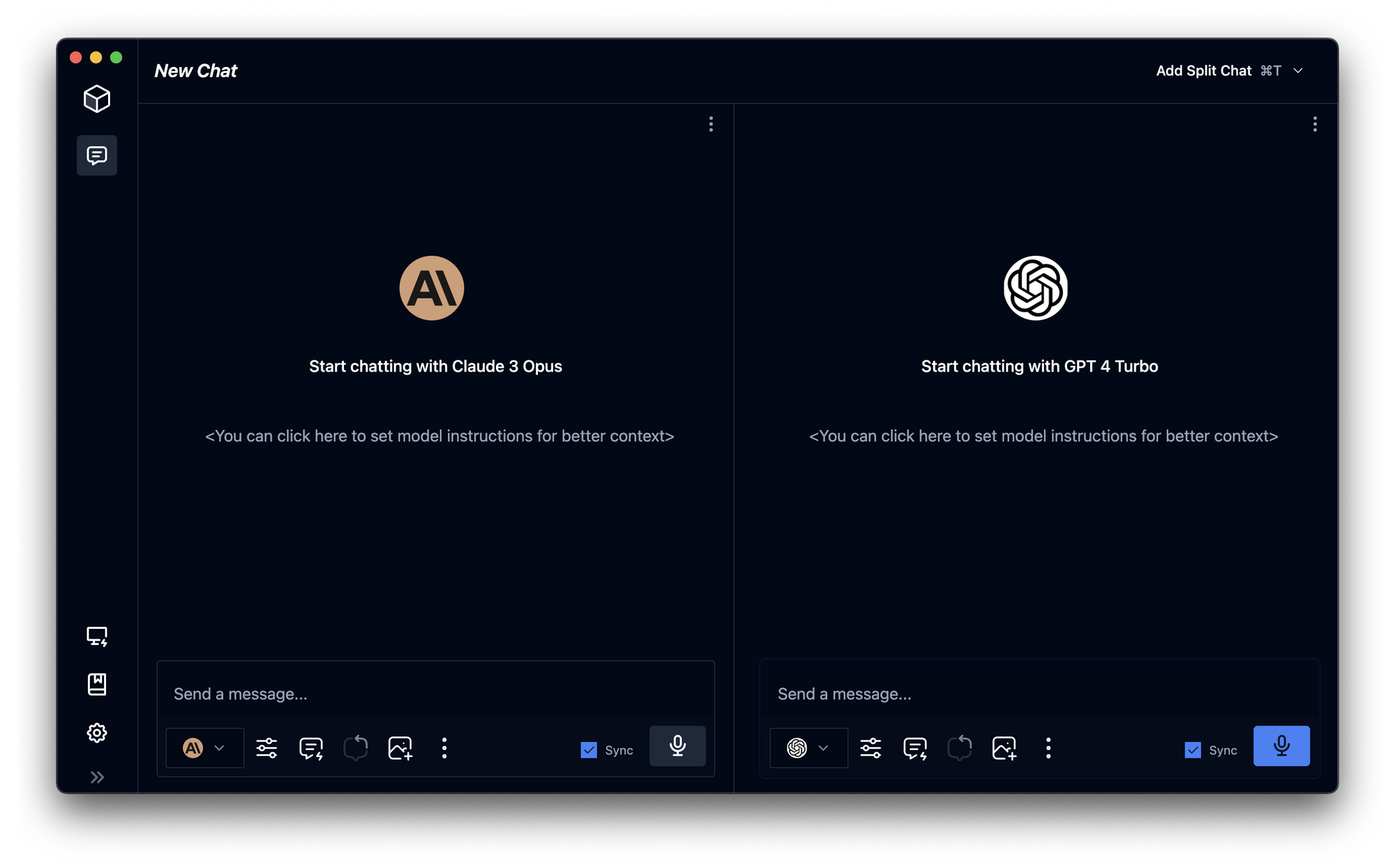

Msty

Msty is another 1-click install. No models included. Can connect to GPT, Claude, Gemini, and Groq via API. Gorgeous UI/UX. Closed-Source. Privacy statement on homepage. Advanced features include a quick prompt library, multiple chats, and swapping AI models mid-chat, and it's speedy. Great for getting started, quick brainstorming sessions, and honing your prompt engineering.

I've written about Msty at greater length (feel free to read) and I'm happy to report it's still my daily driver. No RAG or ability to create embeddings at the time of this writing, but as with all of the apps on this list, development is happening quickly!

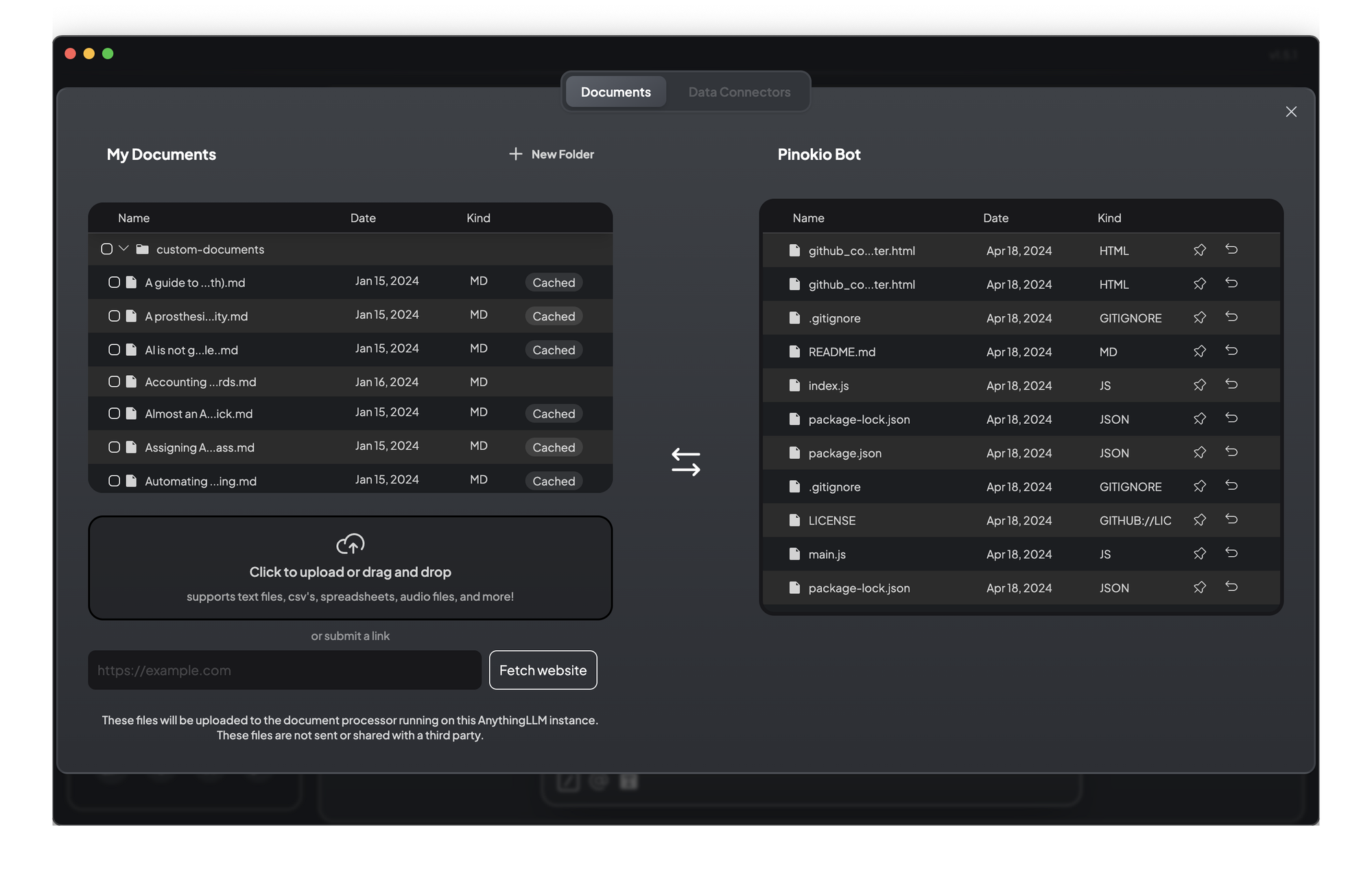

AnythingLLM

AnythingLLM also has a 1-click install. Fastest to get started, as it includes a local embedding model, transcription model, and vector database. Works with everything. Literally everything: it lets you connect to any open-source software or well-known third-party service I can think of at the moment. Average UI/UX. Privacy statement on homepage. Bonus points for being the easiest of the group to get going with basic RAG and vector embeddings, including "connectors" to GitHub and others.

One of two open-source projects on the list and this one is the OG. Awesome! The chat interface itself is structured around the idea of "Workspaces" which house the embeddings and history locally. This is powerful, and likely more interesting to power users and business/enterprise scenarios. It also offers the most integrations and flexibility of any app on the list. That said, the lack of any advanced tools on the conversation/prompting side of things makes it more valuable for testing/building or as a configured web service.
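If "RAG and vector embeddings" sounds abstract, the idea can be sketched in a few lines of Python. This is a toy illustration only, not how AnythingLLM is implemented: word counts stand in for a real embedding model, a plain list stands in for the vector database, and every function name here is made up for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector, not a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=1):
    """Return the k document chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, chunks, k=1):
    """Put the retrieved chunks in front of the question for the LLM."""
    context = "\n".join(retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

A workspace, in this picture, is just the set of chunks plus their stored vectors. The app does the chunking, embedding, and lookup for you, then hands the resulting prompt to whichever model you've selected.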

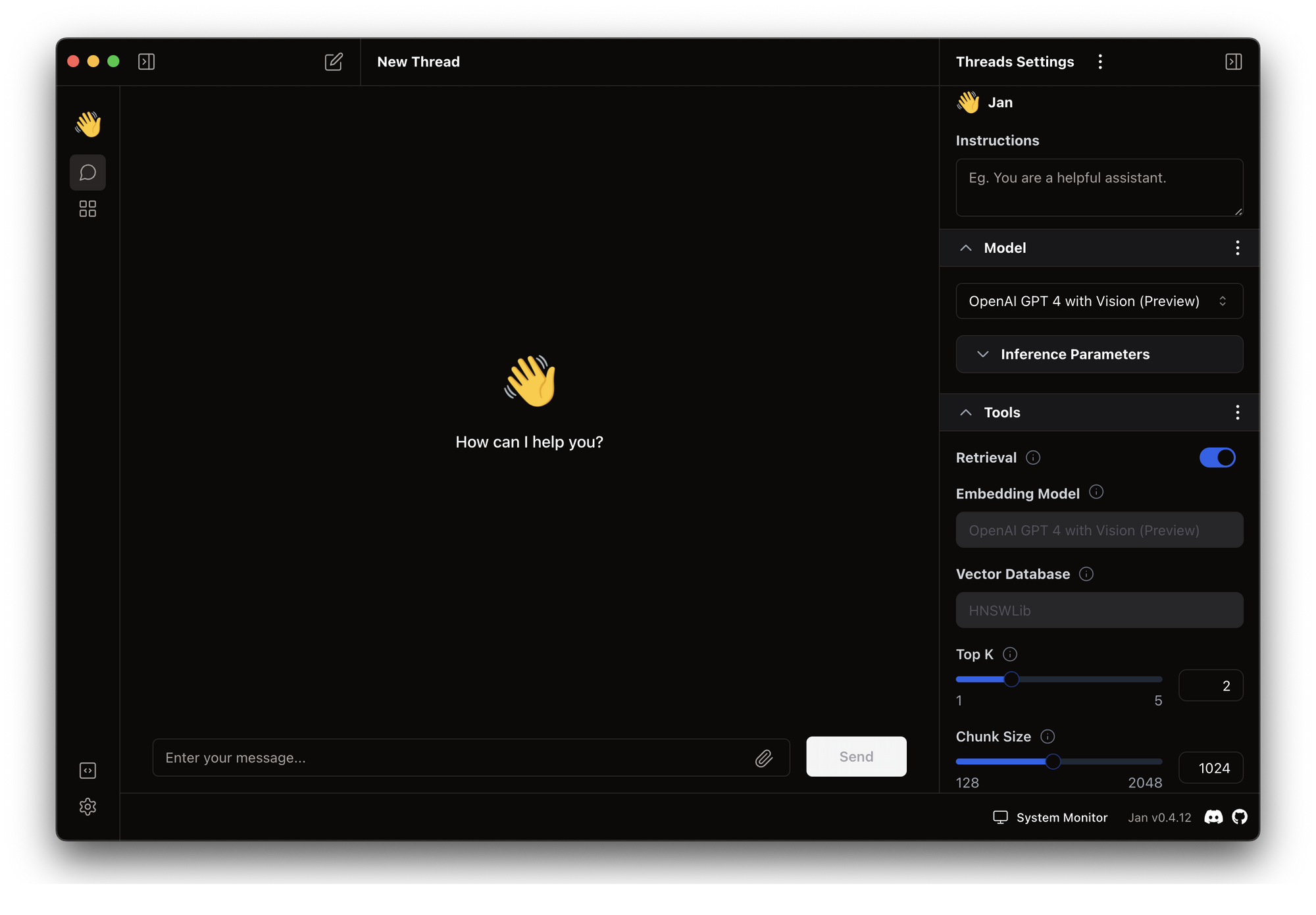

Jan

Jan is a 1-click install. 100% open-source. No model installed by default, but it works with local models, which can be downloaded from Hugging Face via a "hub" in the app. This makes discovery of new models fairly easy. The app has a pleasant UI/UX and provides advanced settings in a right-side panel that I found nice to use. It also looks like embedding model configuration and tool support are coming along in the early betas. The only tool right now is a simple RAG that pulls from a template, but I like that the developers are thinking about this already.

The app also connects to remote models such as GPT-4 and Claude via "extensions" which allow you to enter an API key. This is another smart way of doing it, though I prefer Msty's simpler, single panel for all your keys. Jan installs several other directories in the model folder, and it can get a bit messy when you're pointing several apps to the same one. I ran into a few issues with this one, and I believe it's the newest app on the list. Certainly the newest to me, but an exciting one all the same.

Conclusion

So that's a quick look, here at the beginning of May, at the easiest apps I've found to install and get started with a chatbot. In testing, the biggest pain point was the lack of a standard for local model directories. Additionally, some apps support the same architecture as Ollama, while others rely on "GGUF" versions of the file. I personally would like to see all apps offer both options and default to Ollama when it's available. Regardless, I'm excited by the rapid development we're seeing across the board.
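Until a standard arrives, one way to keep track of what is already on disk is to scan a folder for GGUF files before downloading a model a second time. The sketch below is a small helper of my own devising, not something any of these apps ship. It assumes only that models are stored as files ending in `.gguf`, and the folder you pass in is whatever directory your app uses.

```python
from pathlib import Path


def find_gguf_models(root):
    """Return (relative path, size in MB) for every .gguf file under root."""
    root = Path(root).expanduser()
    results = []
    for path in sorted(root.rglob("*.gguf")):
        if path.is_file():
            size_mb = path.stat().st_size / (1024 * 1024)
            results.append((path.relative_to(root).as_posix(), round(size_mb, 1)))
    return results
```

Calling `find_gguf_models("~/models")` (a made-up path) returns a sorted list you can compare across apps to spot duplicate downloads.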

In the future, I expect we'll have better integration with more than just LLMs. Already, some apps include a local Whisper model for speech-to-text. How long before they also support image diffusion models? A local app that provides a true ChatGPT experience (an LLM helping with prompt creation for the image generation), with tools and long-term memory, doesn't seem all that far-fetched.

BUT WAIT! What's available today that might give us a hint?

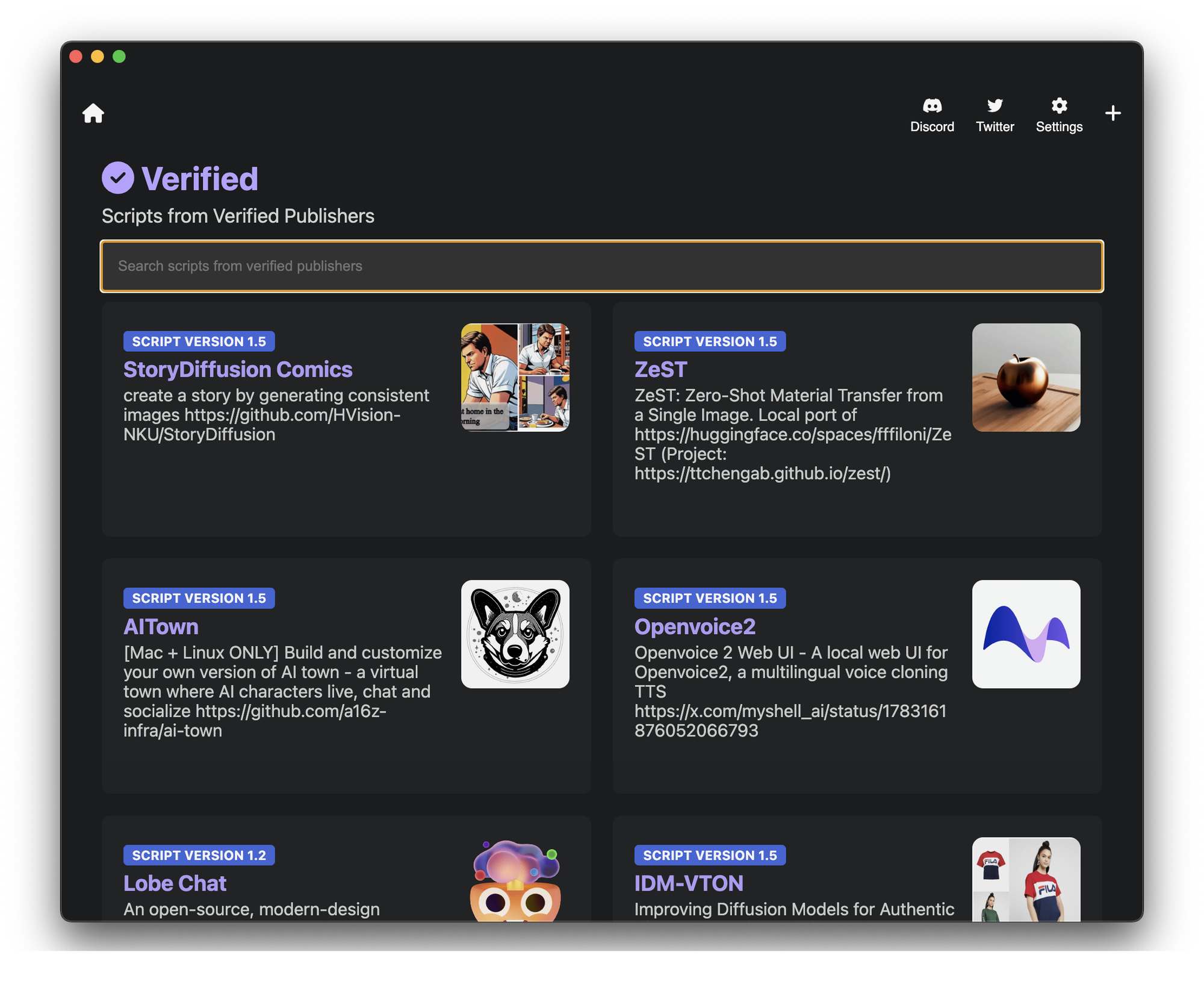

Bonus: Pinokio

Cool project calling itself the "browser for LLMs". It allows you to run just about any open-source Python project on the web. Not just chatbots, but translators, image tools, and many of the latest "research demos".

It's crazy cool. I've used it to build a front-end for an audio resolution upscaler and have also been playing around with AI Town (which needs its own post).

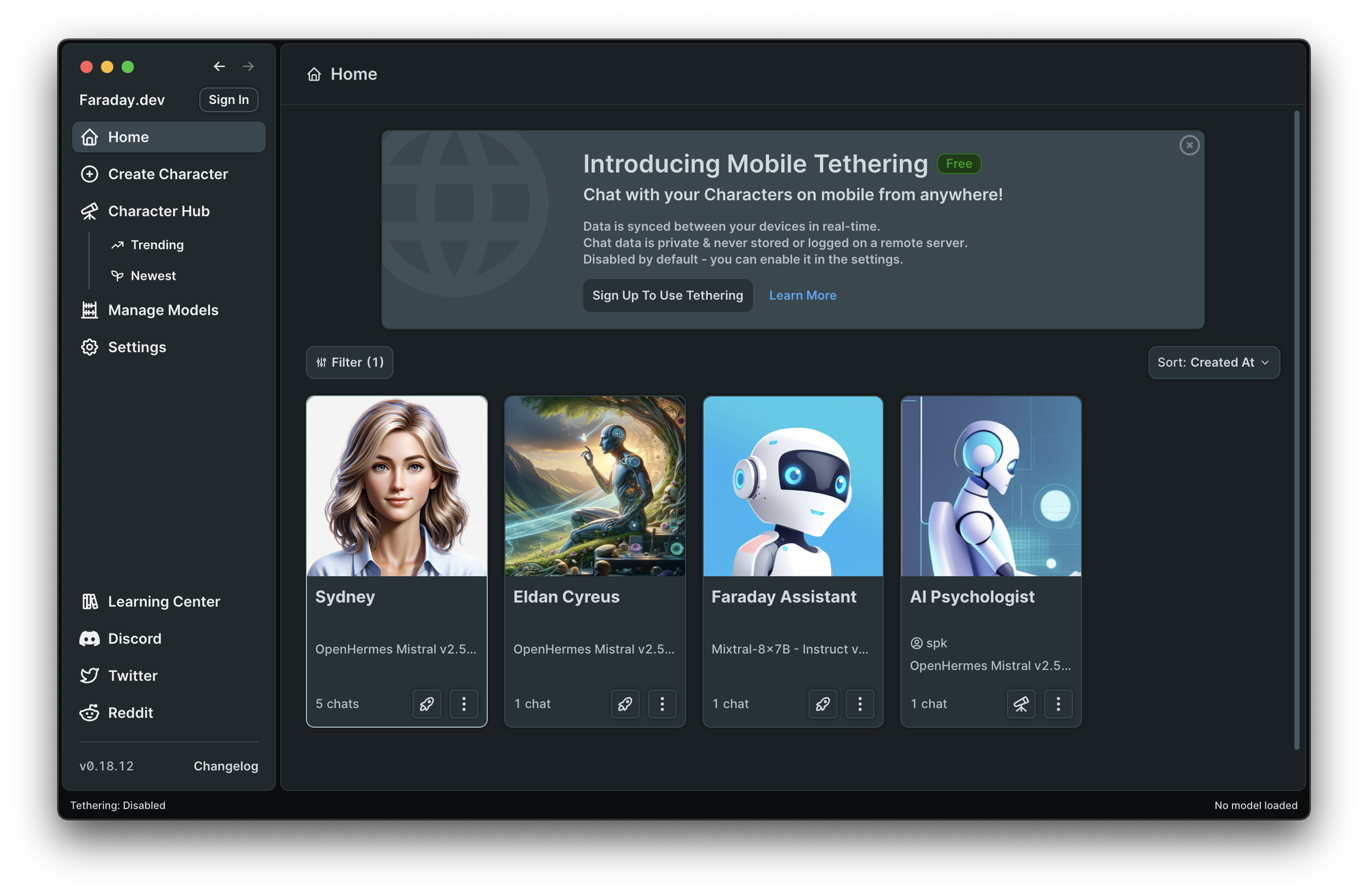

Bonus 2: Faraday

1-click install. No models included. No proprietary/remote models. Same as LM Studio: works with any model on Hugging Face in GGUF format. These are downloaded and stored locally. Average UI/UX. Closed-Source. Privacy policy page (lots of "Click here to learn more" links that don't go anywhere). Faraday is really focused on roleplaying chatbots. This includes NSFW bots, and it is important that you be aware those options exist and can be promoted within the app directory. If that's not your thing, thankfully it's a simple checkbox to filter them out. Perhaps in the future they'll have this toggled off by default.

One unique feature is that the app lets you set up your local install to serve as a remote, so that others can access and chat with the same model, though it requires you to set up a "cloud" account. Many of the additional features of this app require or recommend the cloud account, which I haven't tested.

The approach of "creating a character" leads to different interfaces and framing for the same technology as LM Studio. In some ways, this can make it easier to create more detailed prompts and examples for the chatbot to use. The open directory also allows you to easily share or import someone else's settings and model. They also have automated text-to-speech with the ability to select a voice, add World Info or a "Lorebook", and even give parameters for the type of grammar that will be used. You get the idea.