This is the fourth post in my ongoing series exploring AI-assisted coding ahead of o3 Pro and o4. Check out the initial overview, best practices for AI coding, and my first review of Cursor and Windsurf.

Repo Prompt might seem like the dark horse candidate here, but I'm putting it near the top of the list so you'll actually give it a try. Once you understand its workflow, Repo Prompt becomes invaluable for developing and integrating new features smoothly into your project.

The power of Repo Prompt is threefold. The first part comes from easy file selection. Repo Prompt makes it easy to quickly select files or folders to include in your AI prompt, ensuring the right context for your feature. There are lots of helpful tools here to show you how many tokens each file is using and what your total token count is going to be for the prompt. It also creates a file tree that tells the chatbot which files exist even if they're not included in the prompt. If all of that sounds too complicated, you can also have an AI model with a large context window parse your repository and decide which files are most helpful to include with your prompt.
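To make the token accounting concrete, here's a minimal Python sketch of the idea. This is not Repo Prompt's actual code. The names `estimate_tokens` and `token_report` are hypothetical, and the "4 characters per token" rule is only a rough heuristic I'm assuming here, not a real tokenizer.

```python
from math import ceil

# Assumption: roughly 4 characters per token. Real tokenizers vary by model.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate for a piece of text."""
    return ceil(len(text) / CHARS_PER_TOKEN)


def token_report(files: dict[str, str], selected: set[str]) -> dict:
    """Summarize token usage for the selected files.

    `files` maps every path in the repo to its contents.
    `selected` holds the paths whose contents go into the prompt.
    The file tree lists every path, selected or not, so the model
    can see what exists without paying tokens for the contents.
    """
    per_file = {path: estimate_tokens(files[path]) for path in sorted(selected)}
    return {
        "per_file": per_file,
        "total": sum(per_file.values()),
        "file_tree": sorted(files),
    }


if __name__ == "__main__":
    # Hypothetical repo contents, just for illustration.
    repo = {
        "src/app.py": "print('hello')\n" * 20,
        "src/utils.py": "def helper():\n    return 1\n",
        "README.md": "# My project\n" * 50,
    }
    report = token_report(repo, selected={"src/app.py", "src/utils.py"})
    for path, count in report["per_file"].items():
        print(f"{path}: ~{count} tokens")
    print("total:", report["total"])
    print("tree:", report["file_tree"])
```

The point is the split between the two outputs: you only spend tokens on the files you select, while the file tree keeps the rest of the project visible to the model.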

The second powerful feature of Repo Prompt also has to do with workflow. Repo Prompt encourages users to first PLAN code changes or features. Add your file tree and key files, write the specific thing you want into the prompt box, then select PLAN. At this stage, use the best, largest and "most thinking-est" model available. In the time before o3-Pro, I recommend Gemini 2.5 pro-EXP or Claude 3.7 Thinking MAX. These models handle complex issues really well. I rely on Gemini more because it is much less expensive and has a larger context window.

I'll also mention o1-Pro here. This is an insanely good model, and though its API isn't available on all of OpenAI's pricing tiers, access has expanded over time.

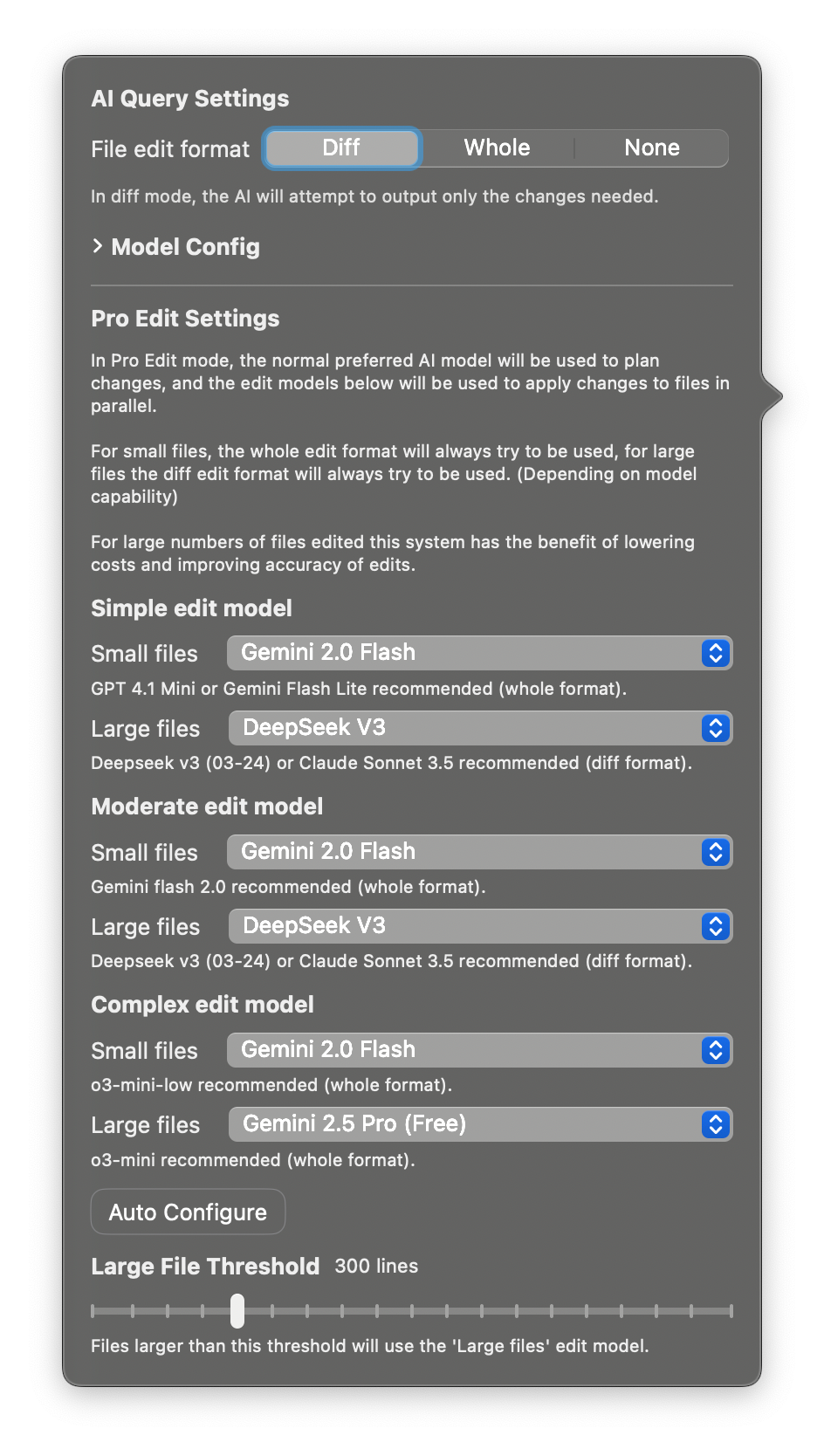

In ACT Mode, Repo Prompt will scan the files and assign very specific commits rather than full file rewrites. Repo Prompt's third superpower is its ability to delegate coding tasks to specific models based on commit complexity and file length. This has massive implications for performance and cost. While only available in Pro mode, this is a killer feature if you're going to be working with a large codebase regularly. Here's a screenshot of how I have mine configured at the moment. Note that I'll change this up slightly depending on the complexity and type of feature I'm developing.

Now, once I start my chat, I use the "most thinking-est model" as my lead engineer to map out the feature request from the architect, then pass the resulting tasks over to smaller, faster, cheaper models to implement the edits. They can even run in parallel. This ends up saving a ton on cost and keeps a thinking model from taking off and rewriting multiple files for what should be a line or two of code.
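Here's a rough Python sketch of what that kind of delegation could look like. To be clear, this is my own illustration and not how Repo Prompt is implemented. The model labels, the `EditTask` fields, the 500-line threshold, and the `run_edit` stub are all assumptions made up for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

# Hypothetical model labels, not real model identifiers.
PLANNER = "large-thinking-model"
MID = "mid-size-model"
FAST = "small-fast-model"


@dataclass(frozen=True)
class EditTask:
    path: str
    file_lines: int   # length of the file being edited
    complexity: str   # "low", "medium", or "high" (assumed to come from the plan)


def pick_model(task: EditTask, long_file_lines: int = 500) -> str:
    """Route a task by complexity first, then by file length."""
    if task.complexity == "high":
        return PLANNER
    if task.complexity == "medium" or task.file_lines > long_file_lines:
        return MID
    return FAST


def run_edit(task: EditTask) -> str:
    """Stand-in for a real model call. It only reports the routing decision."""
    return f"{task.path} -> {pick_model(task)}"


def run_all(tasks: list[EditTask]) -> list[str]:
    """Run the edits in parallel. Results come back in input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(run_edit, tasks))


if __name__ == "__main__":
    plan = [
        EditTask("src/auth.py", file_lines=820, complexity="high"),
        EditTask("src/routes.py", file_lines=640, complexity="low"),
        EditTask("src/config.py", file_lines=40, complexity="low"),
    ]
    for line in run_all(plan):
        print(line)
```

The cost savings come from the last branch: most edits in a typical plan are small, so most of the work lands on the cheapest model.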

All changes are helpfully displayed in an apply window for review. You can accept or reject any individual code change. This is fantastic for making granular changes without the risk of an entire file being overwritten by an ambitious LLM.
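If you're curious what per-change review means mechanically, here's a small sketch using Python's standard `difflib`. It's only an illustration of the concept under my own assumptions, not Repo Prompt's apply window. The function names `list_changes` and `apply_accepted` are hypothetical.

```python
import difflib


def _opcodes(old: list[str], new: list[str]):
    """Diff two lists of lines into equal/replace/insert/delete operations."""
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    return matcher.get_opcodes()


def list_changes(old: list[str], new: list[str]) -> list[tuple[list[str], list[str]]]:
    """Return each proposed change as (old_lines, new_lines), in file order."""
    return [
        (old[i1:i2], new[j1:j2])
        for tag, i1, i2, j1, j2 in _opcodes(old, new)
        if tag != "equal"
    ]


def apply_accepted(old: list[str], new: list[str], accepted: set[int]) -> list[str]:
    """Build the result using only the changes whose index is in `accepted`.

    Rejected changes keep the original lines, so one bad suggestion
    never forces you to take (or lose) the rest of the file.
    """
    result: list[str] = []
    change_index = 0
    for tag, i1, i2, j1, j2 in _opcodes(old, new):
        if tag == "equal":
            result.extend(old[i1:i2])
            continue
        if change_index in accepted:
            result.extend(new[j1:j2])
        else:
            result.extend(old[i1:i2])
        change_index += 1
    return result


if __name__ == "__main__":
    original = ["import os", "DEBUG = True", "def main():", "    print('hi')"]
    proposed = ["import os", "DEBUG = False", "def main():", "    print('hello')"]
    for index, (before, after) in enumerate(list_changes(original, proposed)):
        print(index, before, "->", after)
    # Accept only the second change and keep DEBUG as it was.
    print(apply_accepted(original, proposed, accepted={1}))
```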

Repo Prompt demands a bit more effort up front, but the payoff is substantial. It's the difference between project success and AI slop! The extra care ensures you end up with exactly the app you envisioned. As a non-coder, I've found that as a project grows, I need to slow down, get organized, and do the work of making the codebase digestible for me and future LLMs. No tool does this better than Repo Prompt at the moment, and I highly recommend you give it a go for your next project.